Data Engineering Digest, September 2024

Hello Data Engineers,

Welcome back to another edition of the Data Engineering Digest, a monthly newsletter containing updates, inspiration, and insights from the community.

Here are a few things that happened this month in the community:

Reaching parity in the open table format wars.

Why Kubernetes is not an essential skill for DEs.

How much should DEs study OLTP systems?

New end-to-end Azure Databricks x Fabric architecture guide dropped!

Pinterest Engineering decouples Kafka tiered storage from the broker.

A deep dive into Netflix’s Key-Value data abstraction layer.

Community Discussions

Here are the top posts you may have missed:

1. Are the differences between Delta Lake and Apache Iceberg fading away?

Have Iceberg and Delta Lake become almost the same thing? Obviously they work differently under the hood (manifest files vs. the Delta Log), but do their differences still mean something? Or have they converged on the surface while remaining different enough underneath?

Apache Iceberg and Delta Lake are competing open table formats that are gaining momentum with the rise of the data lakehouse. As a reminder, open table formats are metadata layers on top of data files (typically Parquet) that enable features such as time travel, schema evolution, and ACID transactions for data lakes. While the two formats were previously further apart in features, competition has spurred major moves toward convergence. What does this mean for data engineers, and how do you choose which format to invest in?
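To make time travel and atomic commits more concrete, here is a toy model of the log-based idea behind these formats: each commit records which data files were added or removed, and reading the table "as of" a version means replaying the log up to that point. This is a deliberately simplified sketch with hypothetical names; the real Delta Lake and Iceberg specifications add concurrency control, schema and partition metadata, file statistics, and checkpoints, and Iceberg tracks snapshots through manifest lists and manifests rather than a replayed log.

```python
from dataclasses import dataclass, field
from typing import Iterable, Optional


@dataclass(frozen=True)
class Commit:
    """One entry in a toy table log: data files added and removed together."""
    version: int
    added: frozenset
    removed: frozenset


@dataclass
class ToyTable:
    """Hypothetical, heavily simplified log-based table (not the Delta or Iceberg protocol)."""
    log: list = field(default_factory=list)

    def commit(self, added: Iterable[str] = (), removed: Iterable[str] = ()) -> int:
        """Validate, then append the whole change as a single new version."""
        added, removed = frozenset(added), frozenset(removed)
        missing = removed - self.files()
        if missing:
            # Reject the commit entirely: nothing is appended, so readers never
            # observe a half-applied change (the all-or-nothing idea behind ACID writes).
            raise ValueError(f"cannot remove files not in the table: {sorted(missing)}")
        version = len(self.log)
        self.log.append(Commit(version, added, removed))
        return version

    def files(self, as_of: Optional[int] = None) -> set:
        """Replay the log up to `as_of` (default: latest) to rebuild that snapshot."""
        last = len(self.log) - 1 if as_of is None else as_of
        live: set = set()
        for entry in self.log[: last + 1]:
            live -= entry.removed
            live |= entry.added
        return live


if __name__ == "__main__":
    table = ToyTable()
    table.commit(added=["part-0.parquet", "part-1.parquet"])            # version 0
    table.commit(added=["part-2.parquet"], removed=["part-0.parquet"])  # version 1
    print(sorted(table.files()))         # ['part-1.parquet', 'part-2.parquet']
    print(sorted(table.files(as_of=0)))  # ['part-0.parquet', 'part-1.parquet']
```

Because old data files are only marked as removed rather than deleted, earlier versions stay readable until some cleanup process removes those files.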

💡 Key Insight

Data engineers invested in lakehouses have been closely monitoring the intense rivalry between Apache Iceberg and Delta Lake. Databricks made headlines over the summer when it announced the acquisition of Tabular, the company founded by Iceberg’s original creators, for over 1 billion dollars (Snowflake and Confluent were also bidding for Tabular). Presumably, the move was aimed at taking business from Snowflake, which currently embraces Iceberg (despite the similarly wintry names, Snowflake did not create Iceberg). From a historical perspective, this was an interesting choice, considering that Delta Lake was originally created by Databricks, which remains its main contributor.

With heavy competition underway, feature gaps are closing quickly. On the surface the formats may even seem to have converged, but their underlying architecture and design choices still matter. For example, Delta Lake’s tight integration with Spark may make it the preferred choice for organizations heavily invested in the Spark ecosystem, while Apache Iceberg’s manifest-file architecture makes it more flexible and better suited to multi-engine support. Whichever format you choose, the end result for data engineers is that you can now mix and match compute engines without vendor lock-in and without having to move your data first.

The choice between the two will ultimately depend on the tool integrations you need and the tools your organization already uses. It’s also worth noting that projects like Delta Universal Format (UniForm) and Apache XTable are closing the interoperability gap by letting a table written in one format be read as another, translating the metadata rather than rewriting the data files.

2. Are Kubernetes Skills Essential for Data Engineers?

Kubernetes (often referred to as K8s) is an open-source container orchestration tool, meaning it automates the deployment, management, scaling, and networking of containers. Kubernetes is the industry’s most popular option, but there are others, such as Docker Swarm and Apache Mesos. Red Hat OpenShift is another flavor of Kubernetes with enterprise-grade security features and pre-built configuration settings, and it is also extremely popular with large companies. Understanding Kubernetes allows data engineers to deploy, scale, and manage data applications without constantly relying on a DevOps team.

💡 Key Insight

TL;DR - Kubernetes is not an essential skill for data engineers to know. At least not right now.

Kubernetes is a fantastic tool for managing large-scale deployments and unpredictable workloads. However, it also adds complexity and requires expertise in infrastructure and networking to use effectively. Several data engineers said plainly that most companies do not face the scale or bursty workloads (sudden spikes in data volume) that would justify the additional labor and maintenance required to support it (though some companies take on that challenge regardless).

For most organizations, the built-in autoscaling of managed container services (for example, Amazon ECS, Google Cloud Run, or Azure Container Apps) is likely a better choice. One major caveat, however, is that the volume and complexity of data is growing rapidly every year, so Kubernetes skills may become more valuable over time.
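Whichever platform you choose, target-tracking autoscaling comes down to similar arithmetic. As an illustration, the sketch below implements the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric), with its default 10% tolerance. The function name and the min/max defaults are hypothetical, and the real controller also applies stabilization windows and other rules omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Target-tracking scaling in the style of the Kubernetes HPA formula:
    desired = ceil(current_replicas * current_metric / target_metric).
    """
    ratio = current_metric / target_metric
    # Ignore small deviations so the deployment does not flap (HPA's default tolerance is 10%).
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds; a sudden burst is capped at max_replicas.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, current_metric=90, target_metric=50))   # 8  (4 * 1.8 = 7.2, rounded up)
    print(desired_replicas(4, current_metric=52, target_metric=50))   # 4  (within tolerance)
    print(desired_replicas(4, current_metric=500, target_metric=50))  # 10 (capped by max_replicas)
```

Note how a tenfold burst is capped at `max_replicas`: choosing those bounds, and providing the capacity behind them, is part of the operational work the discussion above refers to.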

3. Do you think data engineers need to spend much time learning OLTP systems?

OLTP systems are often the source of the data that data engineers work with in OLAP systems. While DEs are not usually responsible for OLTP systems, it is still worth spending some time understanding how they work, since upstream changes can directly affect your work.

💡 Key Insight

It’s a recurring joke in the data engineering community that DEs are often handed a hot mess of data that only becomes usable through herculean efforts and band-aid solutions. This is at least partly because application development tends to prioritize immediate functionality without always considering the downstream implications for data.

As a data engineer, you probably don’t need to become an expert in OLTP systems, but engineers who have a foundational knowledge of both OLTP and OLAP systems can improve both by working with application development teams to design schemas that perform well for the application and also make downstream data pipelines more efficient.
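One concrete example of an upstream schema choice that helps downstream pipelines: if the application maintains an indexed `updated_at` column, the pipeline can extract only the rows changed since its last run instead of rescanning the whole table. The sketch below uses Python’s built-in `sqlite3` as a stand-in for PostgreSQL or MySQL; the `orders` table, its columns, and the `extract_changed_rows` helper are hypothetical.

```python
import sqlite3


def extract_changed_rows(conn: sqlite3.Connection, watermark: str) -> tuple:
    """Pull only rows modified after `watermark`; return (rows, new_watermark)."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # Advance the watermark to the newest change seen (unchanged if nothing is new).
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
# Hypothetical OLTP table: an indexed updated_at column, maintained by the application,
# is what lets the pipeline skip a full-table scan on every run.
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT NOT NULL, updated_at TEXT NOT NULL)"
)
conn.execute("CREATE INDEX idx_orders_updated_at ON orders (updated_at)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "placed", "2024-09-01T10:00:00"), (2, "placed", "2024-09-02T09:30:00")],
)

# First run: everything is new.
rows, wm = extract_changed_rows(conn, "1970-01-01T00:00:00")
print(len(rows), wm)  # 2 2024-09-02T09:30:00

# The application updates one order; the next run picks up only that change.
conn.execute(
    "UPDATE orders SET status = 'shipped', updated_at = '2024-09-03T08:00:00' WHERE id = 1"
)
rows, wm = extract_changed_rows(conn, wm)
print(rows)  # [(1, 'shipped', '2024-09-03T08:00:00')]
```

The comparison relies on timestamps stored in one fixed ISO-8601 format, which sort lexicographically in time order. A strict `>` watermark can also miss rows that share a timestamp with the last extracted row or that commit late; log-based change data capture avoids that, but the point stands that a small upstream schema decision shapes how cheap the downstream pipeline can be.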

One member also pointed out that databases typically used for OLTP workloads (e.g., PostgreSQL and MySQL) are also often used for data warehousing at smaller scales. Overall, understanding the basics is time well spent, but deep knowledge of the internals is typically not necessary.

Industry Pulse

Here are some industry topics and trends from this month:

Data Intelligence End-to-End with Azure Databricks and Microsoft Fabric

A new architecture guide was published this month by Azure in conjunction with Databricks.

The Data Intelligence End-to-End Architecture provides a scalable, secure foundation for analytics, AI, and real-time insights across both batch and streaming data. The architecture seamlessly integrates with Power BI and Copilot in Microsoft Fabric, Microsoft Purview, Azure Data Lake Storage Gen2, and Azure Event Hubs, empowering data-driven decision-making across the enterprise.

Pinterest Tiered Storage for Apache Kafka: A Broker-Decoupled Approach

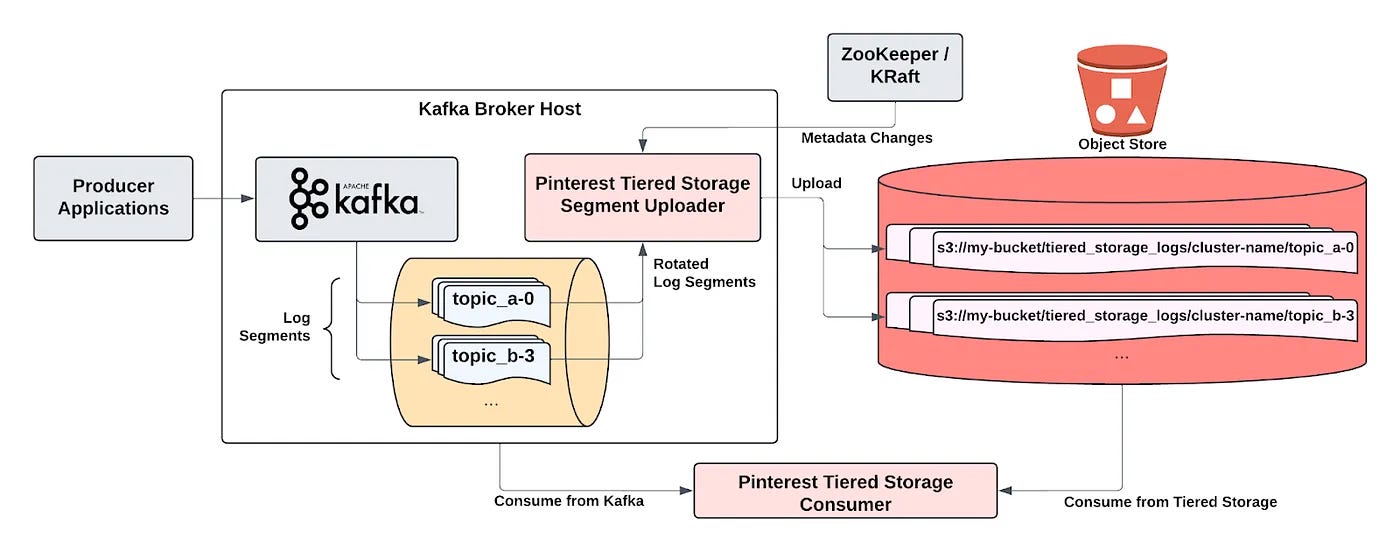

This month Pinterest shared its open-source implementation of Tiered Storage for Apache Kafka, which is decoupled from the broker. This design brings the storage-compute decoupling benefits of MemQ (Pinterest’s in-house PubSub system) to Kafka and, compared with Kafka’s native tiered storage implementation, offers key advantages in flexibility, ease of adoption, cost reduction, and resource utilization.
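Conceptually, tiered storage keeps recent log segments on broker disks and moves older, closed segments to cheaper object storage, falling back to the remote copy when a consumer asks for an old offset. The toy model below illustrates that general idea only: it is not Pinterest’s implementation, all names and the retention policy are hypothetical, and in a broker-decoupled design the offload and remote-read steps would run outside the broker process rather than as methods on a single class.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Segment:
    base_offset: int       # offset of the first record in this segment
    records: list[str]     # one payload per offset
    closed: bool = True    # only closed (immutable) segments may be offloaded

    def contains(self, offset: int) -> bool:
        return self.base_offset <= offset < self.base_offset + len(self.records)


@dataclass
class ToyTieredLog:
    """Hypothetical two-tier log: hot segments on local disk, cold ones in object storage."""
    local: list[Segment] = field(default_factory=list)
    remote: dict[int, Segment] = field(default_factory=dict)  # stand-in for an object store
    local_segments_to_keep: int = 1                           # hypothetical retention policy

    def offload(self) -> None:
        """Upload closed segments, then trim local disk down to the retention limit."""
        for seg in self.local:
            if seg.closed and seg.base_offset not in self.remote:
                self.remote[seg.base_offset] = seg
        # Only delete local copies that already exist in remote storage.
        while (
            len(self.local) > self.local_segments_to_keep
            and self.local[0].base_offset in self.remote
        ):
            self.local.pop(0)

    def read(self, offset: int) -> tuple[str, str]:
        """Return (tier, record), preferring the local copy when one exists."""
        for seg in self.local:
            if seg.contains(offset):
                return "local", seg.records[offset - seg.base_offset]
        for seg in self.remote.values():
            if seg.contains(offset):
                return "remote", seg.records[offset - seg.base_offset]
        raise KeyError(f"offset {offset} is not in any tier")


log = ToyTieredLog(
    local=[
        Segment(0, ["a", "b"]),
        Segment(2, ["c", "d"]),
        Segment(4, ["e"], closed=False),  # active segment, still being written
    ]
)
log.offload()
print([s.base_offset for s in log.local])  # [4]
print(log.read(1))  # ('remote', 'b')
print(log.read(4))  # ('local', 'e')
```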

Introducing Netflix’s Key-Value Data Abstraction Layer

Netflix released a deep dive into how their KV abstraction works, the architectural principles guiding its design, the challenges they faced, and the technical innovations that allowed them to achieve performance and reliability at a global scale.
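Netflix’s post describes the KV abstraction’s data model as a two-level map: a hashed string ID at the first level, pointing to a sorted map of byte keys to byte values. The in-memory toy below sketches that shape under our own assumptions; the class, its methods, and the sample keys are hypothetical and are not Netflix’s API, and the real service sits on top of distributed storage engines rather than Python dictionaries.

```python
from __future__ import annotations

import bisect
from collections import defaultdict


class ToyKeyValue:
    """Toy two-level map: record ID -> sorted map of item key -> value (hypothetical API)."""

    def __init__(self) -> None:
        # Item keys per record ID, kept in sorted order so range reads are cheap.
        self._keys: defaultdict[str, list[bytes]] = defaultdict(list)
        self._values: defaultdict[str, dict[bytes, bytes]] = defaultdict(dict)

    def put(self, record_id: str, key: bytes, value: bytes) -> None:
        items = self._values[record_id]
        if key not in items:
            bisect.insort(self._keys[record_id], key)  # new key: insert in sorted position
        items[key] = value                             # existing key: overwrite the value

    def get_range(self, record_id: str, start: bytes, end: bytes) -> list[tuple[bytes, bytes]]:
        """Return items with start <= key < end, in key order."""
        keys = self._keys.get(record_id, [])
        lo = bisect.bisect_left(keys, start)
        hi = bisect.bisect_left(keys, end)
        return [(k, self._values[record_id][k]) for k in keys[lo:hi]]


kv = ToyKeyValue()
kv.put("user:42", b"2024-09-15", b"event-b")
kv.put("user:42", b"2024-08-20", b"event-c")
kv.put("user:42", b"2024-09-01", b"event-a")
print(kv.get_range("user:42", b"2024-09-01", b"2024-10-01"))
# [(b'2024-09-01', b'event-a'), (b'2024-09-15', b'event-b')]
```

Keeping the inner keys sorted is the design choice that turns a query like "all of this user’s events in September" into a single contiguous slice.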

🎁 Bonus:

🆕 AWS x DeepLearning.AI x Joe Reis released a free Data Engineering course & certification

🏎️ Up to 13x faster Polars with new GPU engine using NVIDIA RAPIDS cuDF

🗺️ “This is a nice map, great work. Can we export it to excel?”

📅 Upcoming Events

10/7-10/10: Coalesce Conference

10/22: Druid Summit

Share an event with the community here or view the full calendar

Opportunities to get involved:

Share your story on how you got started in DE

Want to get involved in the community? Sign up here.

What did you think of today’s newsletter?

Your feedback helps us deliver the best newsletter possible.

If you are reading this and are not subscribed, subscribe here.

Want more Data Engineering? Join our community.

Want to contribute? Learn how you can get involved.

Stay tuned next month for more updates, and thanks for being a part of the Data Engineering community.