Hello Data Engineers,

Welcome back to another edition of the data engineering digest - a monthly newsletter containing updates, inspiration, and insights from the community.

📢 Announcements 📢

We are hosting our first ask me anything (AMA) events next month with the Great Expectations team and Joseph Machado from startdataengineering.com. Don’t forget to click on the “Remind me” button on the AMA so you don’t miss when it goes live!

We are experimenting with a new industry pulse section in this newsletter. Please let us know what you think using the feedback buttons at the end.

Now back to the digest.

Here are a few things that happened this month in data engineering:

There’s no magic in handling deleted data.

How to build a data…pond?

Building real real-time dashboards.

Uber makes their data pipelines Sparkle.

The mind-blowing numbers behind Prime Day 2024.

Is Docker universally loved?

Community Discussions

Here are the top posts you may have missed:

1. Deletes in ETL

How do you all handle deletes in ETL? I need to replicate data from our operational database to a new location, and I feel that all solutions I look at come up short when faced with deletes. That is, the exception is log-based CDC, but this seems to mostly require streaming and is fairly expensive compared to batch?

Handling deleted data in data pipelines is a common task and some engineers are curious as to what all of the options are - here is our attempt to lay out the most commonly used options.

💡 Key Insight

First, we want to break this problem down into two parts: what you can do at the source and what you can do outside of the source. Let’s start at the source assuming you have access or influence to make changes.

Options for saving deleted data events:

Soft deletes: Instead of physically deleting records (also referred to as “hard deletes”), add a flag and a timestamp that represents when a record is deleted. Very common.

Audit tables: Purpose-built tables that are used to track changes to data you care about. This can be deletes but also include updates and new data. Typically powered by database triggers. May affect the source write performance but not uncommon.

Move deletes to a separate table. Probably not as common as the other options.

Options for capturing changes (deletes, inserts, and updates) when the previous options are unavailable:

Compare tables: load the full table and then on the next run compare the latest source data to it. Whatever is missing has been deleted.

The least efficient but simplest method. One data engineer pointed out that you could create a separate pipeline that only pulls in the natural key (primary key) and then compare that. May save some time/resources but avoid it if possible.

Incremental load: find the max value of a modified timestamp or auto incremented column (usually a primary key) then query the source for records greater than that value to find new and updated records. This method is very common but does not capture hard deletes.

Becomes inefficient at larger data volumes and puts an extra burden on the source database. At a certain data size, querying the source for changes may take too long/be too expensive and log-based CDC becomes more practical.

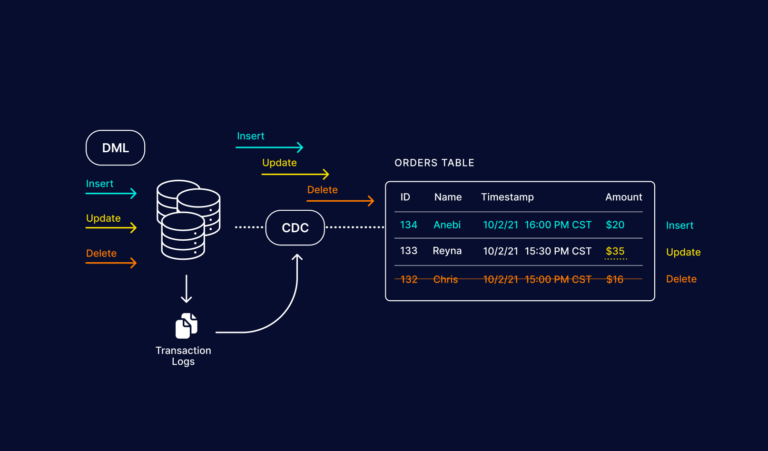

Log-based change data capture (CDC) - when data engineers use the term CDC they are usually referring to log-based CDC. This option involves using software that reads the source database logs for changes to tables and then sends that data somewhere you can ingest it (typically a stream).

At smaller scales, the other batch options will be cheaper because of the cost of constantly running a server with CDC software on it. At larger scales, it’s a practical option for capturing changes and doing so in real-time. It is a widely used technique in data engineering.

Recommended reading: Evaluating Change Data Capture Tools: A Comprehensive Guide

2. Best way to build a Small Data Lake? (<100GB)

One member wanted to know what tools you would use if you were building a regular data lake with the exception that the data is small enough to fit into the RAM of a single machine.

Data lakes/lakehouses can support a wide variety of data types and give you full control over how the data is managed which is why they are popular for large scale deployments. But maybe you know you are planning to scale so you want to plan ahead or you just want to learn how data lakes work even though you may not “need” one. It might also be interesting just to see what technology choices there are at a smaller scale and if they differ from what you would use at a larger scale.

💡 Key Insight

Data lakes are usually made up of a few key components: blob storage + a file format, compute, and a metadata catalog. Most of the parts that the community suggested were the same as you would use in a normal data lake but the main difference was what tool you would choose for compute. Instead of a distributed processing framework like Spark, you could instead use lighter-weight tools like polars or duckdb that are better suited for processing data on a single machine.

This is an example of why data lakes are useful. You can swap out just the parts you need. When you’re ready to scale you can swap out just the compute for a distributed processing option like Spark or Trino.

In reality, data lakes still take an upfront investment to set up and maintain and at smaller scales most data engineers agreed they would probably opt for a managed database solution that abstracts the infrastructure and configuration components, allowing them to focus on delivering business value.

3. How do you actually create real-time dashboards? (Not near real-time)

What would the end to end data architecture look like for a true real time dashboard?

One concept that every data engineer should understand is that “real-time” must be defined before an accurate recommendation can be given. Asking for real-time without defining what real-time means is like saying you want a number between 0 and 1 - without specifying how precise you need to be (0.99 vs 0.9999 vs 0.99999999…). The latency you aim for is what will determine the tools you choose, the resources allocated, and the tradeoffs you make in calculating metrics. Without clear boundaries it's hard to know exactly what you’re aiming for. In real-time streaming, each reduction in latency can increase costs multiple fold.

💡 Key Insight

The first insight from the community is that most data pipelines powering analytical use cases are not real-time because either they power dashboards/reports that aren’t monitored constantly or the metrics themselves aren’t actionable in real-time. A few use-cases where real-time analytics are actionable are areas like threat detection, fraud detection and emergency medical systems. Typically, these real-time pipelines power automated systems and aren’t directly used by humans.

If we do need to build a true real-time pipeline the first thing we need to do is define what the latency requirement is aka the end-to-end latency.

End-to-end latency = ingest latency + query latency

The fastest end-to-end latency will involve ingesting new data as soon as it is written, doing as few modifications as possible to calculate a metric, and sending it to a destination. The more steps in between or the more complicated the calculation the longer it will take. The speed it takes to get the data from the source can depend on the size of the events and the compute resources available. Here is an example:

Log-based CDC => stream (raw) -> stream processor -> stream (processed) => visualizationExample with tools:

Debezium => Kafka -> Flink -> Kafka => GrafanaGeneral guidelines:

Decrease ingest latency by

Separating ingest compute from query compute

Appropriately sizing your compute/choosing the right type of compute instance (e.g. memory optimized).

Limit data ingested to only the data you need.

Appropriately size your stream.

Decrease query latency by

Doing as few transformations as possible

Trade accuracy for speed using approximation methods like HyperLogLog. Read more about sketch algorithms and sampling.

Bonus article: Computing Accurate Percentiles with DDSketch

Industry Pulse

Here are some industry topics and trends from this month:

Sparkle: Standardizing Modular ETL at Uber

The data engineering team at Uber recently shared Sparkle - a data pipeline framework written on top of Apache Spark to simplify and standardize Spark pipeline development and testing. Sparkle provides boilerplate code and various source and sink integrations out of the box so that the data engineer can just focus on writing the business logic expressed in either SQL or Java/Scala/Python-based procedural blocks. With Sparkle, their team was able to increase code re-usability, improve developer productivity by 30%+ and achieve 100% data quality test coverage.

How AWS powered Prime Day 2024 for record-breaking sales

This year’s Amazon Prime Day (July 17-18th) was a record-breaking event and Amazon sold more items than any previous Prime Day event. The AWS team broke down some of the mind-blowing numbers related to the AWS services behind the event.

Thank You to the Stack Overflow Community for Ranking Docker the Most Used, Desired, and Admired Developer Tool

Docker is a popular tool in data engineering for running workloads and for local development when paired with dev containers. In Stack Overflow’s 2024 Developer Survey Docker was ranked the most-admired and ranked most-used tool in the other tools category.

Want to learn Docker in 2024? Check out our learning resources page for recommendations by other data engineers.

🎁 Bonus:

🏅 Several Olympics-inspired memes: Sr. Data Engineer vs excel guy and Senior vs. Staff Data Engineer

♟️ I made a data pipeline to help you get data from the Lichess database

📅 Upcoming Events

9/17-11/4: DataKind's DataKit Event

9/23-9/24: Small Data SF 2024

9/30-10/2: PGConf NYC 2024

Share an event with the community here or view the full calendar

Opportunities to get involved:

Share your story on how you got started in DE

Want to get involved in the community? Sign up here.

What did you think of today’s newsletter?

Your feedback helps us deliver the best newsletter possible.

If you are reading this and are not subscribed, subscribe here.

Want more Data Engineering? Join our community.

Want to contribute? Learn how you can get involved.

Stay tuned next month for more updates, and thanks for being a part of the Data Engineering community.

and The Journey continues Ripples....