Data Engineering Digest, July 2023

Hello Data Engineers,

Welcome back to another edition of the data engineering digest - a monthly newsletter containing updates, inspiration, and insights from the community.

Here are a few things that happened this month in the community:

Are there any good no-code data platforms?

What is GROUP BY 1 and when to use it.

Data Engineering headaches.

Unit testing implementations in dbt.

Will data engineering be the next data science?

The most common mistakes data engineers make and how to avoid them.

Community Discussions

Here are the top posts you may have missed:

1. What are the best no-code platforms you have come across?

One of our data engineers asked the community which no-code data platforms they recommend. They mentioned an interest in Palantir Foundry but were put off by the high cost.

“Curious if anyone here has used Palantir's Foundry or other tools that give non-technical users a place to search and see data exists, do their data manipulation, exploration, and then visualization on both tabular and time series data.”

No-code tools are attractive to non-technical users and, in theory, make data work accessible to more people in a company, which executives also like. The tradeoff is that complex tasks tend to expose the platform's limitations. Without a way to write your own custom code, you end up with a workaround or no solution at all.

💡 Key Insight

Community members suggested a handful of tools, such as Domo, Dataiku, WhereScape, SSIS, and Alteryx, but warned that doing anything custom with these kinds of tools tends to be unsatisfying. The community also shared the sentiment that a platform that tries to do everything rarely does any one thing as well as a single-purpose tool. One member stated: “There are no good no-code tools. The only ones that benefit from no-code tools are those selling it.”

2. Why use "GROUP BY 1"?

A community member who is just learning dbt wondered why the training courses often use “GROUP BY 1”. Is this an odd internal convention, or should you always use the column name in the GROUP BY clause?

It’s not just a dbt feature. Many SQL dialects support grouping by position, so it presumably has some value, yet many data engineers don’t really know why it’s useful or when to use it.

💡 Key Insight

One data engineer explained that the feature improves readability when the column you're grouping by isn't just a plain column but a complex expression, because you don’t have to type it all out twice. Another reason to use it is that it makes your SQL robust to column name changes. However, most data engineers agreed that, in general, you should still prefer grouping by the column name because it’s more explicit. You don’t have to worry about column order or remember which number refers to which column when you have a lot of columns.
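To make the tradeoff concrete, here is a minimal sketch that runs both forms side by side. It uses Python's built-in sqlite3 module as a stand-in warehouse, and the orders table and its columns are made up for illustration.

```python
import sqlite3

# Hypothetical example data: an in-memory table of orders.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_date TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("2023-06-03", 10), ("2023-06-20", 5), ("2023-07-01", 7)],
)

# Positional form: "1" refers to the first expression in the SELECT list,
# so the strftime(...) expression is written only once.
positional = conn.execute(
    """
    SELECT strftime('%Y-%m', order_date) AS order_month, SUM(amount) AS total
    FROM orders
    GROUP BY 1
    ORDER BY 1
    """
).fetchall()

# Explicit form: the grouping expression is repeated in full.
explicit = conn.execute(
    """
    SELECT strftime('%Y-%m', order_date) AS order_month, SUM(amount) AS total
    FROM orders
    GROUP BY strftime('%Y-%m', order_date)
    ORDER BY order_month
    """
).fetchall()

print(positional == explicit)  # both queries group the same way
```

The positional query stays valid if the expression changes, but it silently changes meaning if someone reorders the SELECT list, which is the risk the community pointed out.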

As a side note, https://courses.getdbt.com/collections is a fantastic resource that we highly recommend. dbt in particular invests plenty of resources and capital into educational content around their product, so unlike many older tech tools, their own content is one of the best educational resources available.

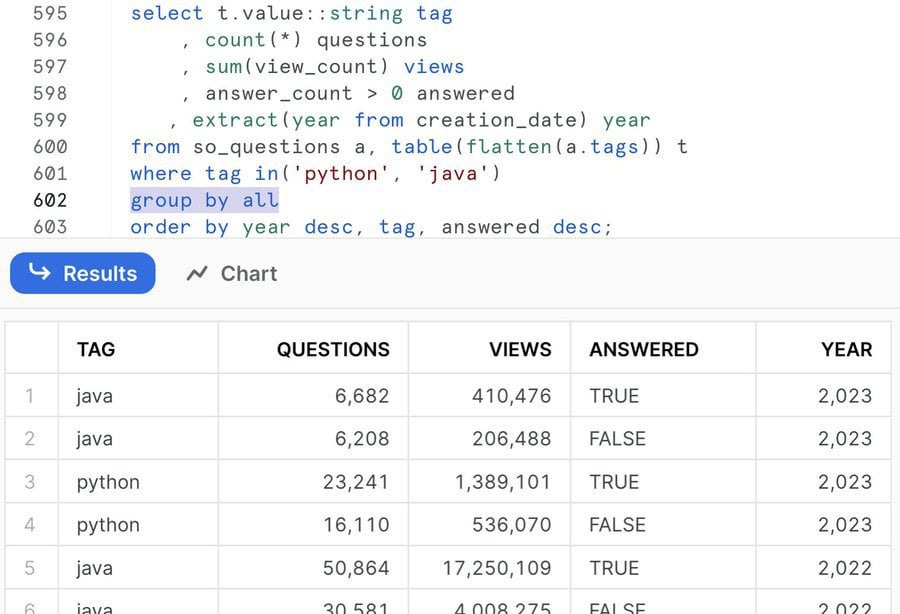

Second side note, Snowflake recently announced that you can now use GROUP BY ALL. 🤯

3. Data Engineering Headaches

“As a Data Engineer what’s your biggest headache, frustration, time suck?”

Many folks in the data engineering field dream of a job spent in isolation, filled with quiet mornings and no useless meetings. Unfortunately, that dream is rarely reality, and the job can be stressful.

💡 Key Insight

The number one headache data engineers seemed to have was dealing with other people and managing stakeholder expectations. As with most “supportive jobs”, data engineers often need to help justify their worth to the business. If you are considering making a career switch to data engineering, or wondering if it’s worth taking on a more senior role in the space, being able to communicate effectively with stakeholders is often a very important part of being a quality data engineer.

Another common pain point was dealing with data sources that data engineers have no control over, such as an application database (hello MongoDB) owned by the engineering team. That team may iterate quickly and constantly change the schema without regard for how it’s currently being used, which leads to a lot of frustration when your pipeline breaks and it’s not your fault.

4. What is your unit testing implementation?

“Curious to see what others use to unit test in the DE space. I work primarily on the transformation side, where we’ve picked up DBT…Unit testing within DBT is spotty at best, flat out doesn’t exist at worst.”

For data engineers, it’s less common to unit test SQL logic than it is to unit test Python code. Is it because of a lack of SQL expertise or is it just harder to unit test SQL in the first place?

💡 Key Insight

Before diving in, it’s worth distinguishing between unit tests and data quality tests (or data validation checks).

Unit tests ensure that a function always returns an expected output given certain inputs. These kinds of tests can be run at any time and usually run as part of a series of tests within a CI/CD process. They are useful for catching bugs in your pipeline before they make it to production.

Data quality tests, on the other hand, check that the data moving through the pipeline meets certain expectations. These tests run after the pipeline has been deployed and catch issues with the data itself even if the pipeline is working as expected. In short, unit tests are for testing code and data quality tests are for testing the data.
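The distinction can be sketched in a few lines of Python. The function names and the email rule below are hypothetical, chosen only to show one test of each kind.

```python
def normalize_email(raw: str) -> str:
    """Pipeline code under test: trim whitespace and lowercase an address."""
    return raw.strip().lower()


def test_normalize_email() -> None:
    # Unit test: a fixed input must always produce the same expected output.
    # It needs no real data and can run in CI before anything is deployed.
    assert normalize_email("  Ada@Example.COM ") == "ada@example.com"


def find_invalid_rows(rows: list[dict]) -> list[dict]:
    # Data quality check: runs against the actual rows flowing through the
    # pipeline and returns those that violate an expectation.
    return [r for r in rows if not r.get("email") or "@" not in r["email"]]


test_normalize_email()

# A made-up batch standing in for real pipeline data.
batch = [{"email": "a@b.com"}, {"email": None}, {"email": "nope"}]
bad_rows = find_invalid_rows(batch)
print(f"{len(bad_rows)} of {len(batch)} rows failed the email check")
```

The unit test would fail if someone broke normalize_email, while the quality check can fail even when the code is correct, because the incoming data is the thing being judged.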

Read our official guide: Testing Your Data Pipeline

For dbt in particular, some members shared how they do unit testing with custom macros, while others have found dbt packages like dbt-mocktool or dbt-unit-testing that make it easier. But unlike traditional unit tests, you need static input data to run the SQL against, stored in something like a CSV file (a seed file in dbt) or a local database like DuckDB.
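Here is a rough sketch of that static-data idea outside of dbt. It assumes Python's built-in sqlite3 as the local database (standing in for something like DuckDB), and the model SQL and orders table are invented for the example. It does not use any dbt package API.

```python
import sqlite3

# Hypothetical model logic under test: revenue per customer,
# counting completed orders only.
MODEL_SQL = """
SELECT customer_id, SUM(amount) AS revenue
FROM orders
WHERE status = 'completed'
GROUP BY customer_id
ORDER BY customer_id
"""


def run_model(fixture_rows):
    """Load static fixture rows into a throwaway database and run the model."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (customer_id INTEGER, amount INTEGER, status TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", fixture_rows)
    result = conn.execute(MODEL_SQL).fetchall()
    conn.close()
    return result


def test_only_completed_orders_count():
    # Static input plays the role of a seed file: small, hand-written, and
    # designed to exercise one rule (cancelled orders are excluded).
    fixture = [(1, 100, "completed"), (1, 50, "cancelled"), (2, 30, "completed")]
    assert run_model(fixture) == [(1, 100), (2, 30)]


test_only_completed_orders_count()
```

Because the fixture is tiny and fixed, the expected output can be written by hand, which is what makes this a unit test and not a data quality check.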

5. Is data engineering the next data science?

Data Science gets mainstream media attention, with generative AI and machine learning dominating the headlines. But while Data Engineering usually accompanies and supports Data Science, it’s often the forgotten lil bro when it comes to public awareness. One member asks if it’ll be Data Engineering’s chance to shine soon.

Similar to IT departments, it can be difficult for data engineers to get recognition for their work because there is less they can “show off.” Many data engineers feel like they are only acknowledged when something breaks, which can lead to a negative perception of data engineers.

💡 Key Insight

Data engineers seem to agree that because of the nature of the work, it’s unlikely data engineering will ever gain the same popularity or recognition as data science. Data engineers themselves describe the work as “not sexy”, “plumbing” and “unglamorous” but essential. This is because the work is often not seen as directly tied to value creation for businesses. However, with the rise of AI and an ever-increasing amount of data being produced, the future for data engineers is bright. Also, being an un-sexy job may actually be a great advantage for those who enjoy it because it makes it easier to find and keep a high-paying job.

Do you think data engineering is the “Charlie work” of software development?

6. What are some common data engineering mistakes you’ve seen in your career?

In data engineering, mistakes can be costly. Whether it’s not choosing the right tool for the job, or solving the wrong problem, nobody wants to be responsible for financial mistakes or wasting time. Seasoned data engineers weighed in on this discussion and ways you can avoid these mistakes as well.

💡 Key Insight

We compiled a list of the most common mistakes data engineers make:

Building something custom instead of using an existing tool/framework.

Data engineering has been around for a while and many of the problems have existing solutions/patterns that you can use. There is still sometimes a need to build something custom but it shouldn’t be your first choice.

Not testing your code/pipeline before pushing changes to production.

Take time to understand the data and implement automated testing as part of your CI/CD process (or start one if you don’t have one).

Lack of exception handling and alerting for errors.

Data engineers must plan for failure and use patterns like exponential backoff retries and write-audit-publish to handle errors. But if you catch an error and no one gets alerted about it, did it really happen?
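As a minimal sketch of the retry-then-alert idea, assuming a hypothetical helper named run_with_retries and an alert callback you would wire to your own paging or chat tool:

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    alert: Callable[[str], None],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run task, retrying with exponential backoff; alert if every attempt fails."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == max_attempts:
                # Out of retries: tell a human, then re-raise so the run
                # is still marked as failed.
                alert(f"task failed after {attempt} attempts: {exc!r}")
                raise
            # Wait base_delay, then 2x, then 4x, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

Usage would look like run_with_retries(load_from_api, alert=send_to_slack), where both names are placeholders for your own functions. Production versions often add random jitter to the delay and retry only on errors known to be transient.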

Not making idempotent pipelines.

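This is one of the core concepts all data engineers should know. An idempotent pipeline produces the same result no matter how many times it runs on the same input, so reruns and backfills don't create duplicates.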
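One common way to get there is to replace a whole partition on every run so a rerun never appends twice. The sketch below assumes a hypothetical daily_sales table and uses Python's built-in sqlite3 to stay self-contained.

```python
import sqlite3


def load_partition(conn, load_date, rows):
    """Replace one day's rows in a single transaction so reruns are safe."""
    with conn:  # commits on success, rolls back if anything raises
        conn.execute("DELETE FROM daily_sales WHERE load_date = ?", (load_date,))
        conn.executemany(
            "INSERT INTO daily_sales (load_date, sku, qty) VALUES (?, ?, ?)",
            [(load_date, sku, qty) for sku, qty in rows],
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_sales (load_date TEXT, sku TEXT, qty INTEGER)")

batch = [("A", 3), ("B", 1)]
load_partition(conn, "2023-07-01", batch)
load_partition(conn, "2023-07-01", batch)  # simulated rerun of the same job

row_count = conn.execute("SELECT COUNT(*) FROM daily_sales").fetchone()[0]
print(row_count)  # still one copy of the batch, not two
```

A plain INSERT without the DELETE would double the rows on the second run, which is exactly the bug idempotency is meant to prevent.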

Lack of data modeling.

Just because newer databases have better performance doesn’t mean you can skip modeling. A good data model improves performance and makes it easier to understand the data. Long live data modeling!

Jumping into a new project that ends up not being useful and never gets used.

Avoiding this one is a skill that data engineers must master over time. It means talking to stakeholders to gather requirements and asking questions to get to the root of the problem instead of taking requirements at face value. If you make this mistake, you’ll likely be frustrated at having wasted time solving the wrong problem.

Last but not least, a common mistake that junior engineers make is treating non-technical folks as if they are dumb.

Remember, you are good at what you do because you do it every day. Everyone has different skillsets and you shouldn’t expect other people you work with to know everything. The most successful data engineers are not only technically capable but also expert communicators who can explain concepts in ways non-technical folks can easily understand without being condescending.

🎁 Bonus:

📅 Upcoming Events

8/21: MDS Fest 2023

8/29: Google Cloud Next 2023

Share an event with the community here or view the full calendar

What did you think of today’s newsletter?

Your feedback helps us deliver the best newsletter possible.

If you are reading this and are not subscribed, subscribe here.

Want more Data Engineering? Join our learning community or our professional community.

We’ll see you next month!