Hello Data Engineers,

Welcome back to another edition of the Data Engineering Digest - a monthly newsletter containing updates, inspiration, and insights from the community.

Here are a few things that happened this month in the community:

Schema on read vs schema on write.

Would you choose Apache Spark today?

Why data engineers don’t test: according to Reddit.

The AI Agents Trend in DE.

Model Once, Represent Everywhere: Unified Data Architecture at Netflix.

Databricks: Introducing Apache Spark 4.0.

Community Discussions

Here are the top posts you may have missed:

1. Does anyone still think "Schema on Read" is a good idea?

…It's always felt slightly gross, like chucking your rubbish over the wall to let someone else deal with.

As the name implies, schema on read involves storing data “as-is” and applying a schema only when the data is used (typically via a query or view). It’s a practice commonly used with ELT (extract, load, transform) pipelines, which have become more popular as the cost of storing data has plummeted over the past few decades.

While it’s a commonly used technique, it has its downsides and this data engineer hints at just one of the issues.

💡 Key Insight

Before getting into the tradeoffs, it’s important to highlight that schema on read is recommended only for your raw layer / landing zone. Generally speaking, by the time data is used for analysis or by another system, your pipelines should be enforcing schema on write. The steps in between can be evaluated on a case-by-case basis.

Pros of schema on read:

Faster, more flexible data ingestion because there is no schema enforcement. Great for diverse and rapidly changing data, or for data from sources you have no control over, like a public API.

New columns being added won’t break your pipeline.

Future-proof for unknown use cases. Retaining full raw data means you can revisit and reprocess it later for new questions or metrics without re-ingesting.

Cons:

It can reinforce the notion that the data producer doesn’t have to care about the effects of changes downstream.

Errors are detected later. Data issues surface at query time instead of ingest time.

Slightly higher query latency/complexity. Since structure is applied at read time, queries may require more processing power and take longer to run.

At the end of the day, real-world data is messy and businesses increasingly want insights faster, which will continue to drive this trend.
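To make the tradeoff concrete, here is a minimal sketch in plain Python of where the error shows up under each approach. The `ORDER_SCHEMA` mapping, the function names, and the sample records are all hypothetical and exist only for illustration; a real pipeline would typically lean on its storage engine or a validation library instead.

```python
import json

# Hypothetical schema for illustration: field name -> expected Python type.
ORDER_SCHEMA = {"order_id": int, "amount": float}


def apply_schema(record: dict, schema: dict) -> dict:
    """Keep only the schema's fields; fail loudly on a missing or mistyped one."""
    typed = {}
    for field, expected_type in schema.items():
        if field not in record:
            raise ValueError(f"missing field: {field}")
        value = record[field]
        if not isinstance(value, expected_type):
            raise ValueError(
                f"{field} should be {expected_type.__name__}, "
                f"got {type(value).__name__}"
            )
        typed[field] = value
    return typed


def ingest_schema_on_read(raw_lines: list[str]) -> list[str]:
    """Raw layer: store every payload as-is, with no validation at ingest."""
    return list(raw_lines)


def ingest_schema_on_write(raw_lines: list[str]) -> list[dict]:
    """Curated layer: validate at ingest, so a bad record is rejected here."""
    return [apply_schema(json.loads(line), ORDER_SCHEMA) for line in raw_lines]


def query_total(stored_lines: list[str]) -> float:
    """Schema on read: structure is applied only when the data is queried."""
    return sum(
        apply_schema(json.loads(line), ORDER_SCHEMA)["amount"]
        for line in stored_lines
    )


good = '{"order_id": 1, "amount": 9.5, "coupon": "NEW"}'  # extra column is harmless
bad = '{"order_id": 2, "amount": "ten"}'  # wrong type for amount

# Ingestion succeeds for both records; the type error is deferred to query time.
landing_zone = ingest_schema_on_read([good, bad])
```

In this sketch, `query_total(landing_zone)` raises a `ValueError` only when someone reads the data, while `ingest_schema_on_write([good, bad])` raises the same error at load time. The new `coupon` column breaks neither path, because only the fields named in the schema are read.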

2. How many of you are still using Apache Spark in production - and would you choose it again today?

There is a mix of underlying questions here: Is Spark still the best tool even though it’s old(er)? Do engineers use Spark because it’s the best tool or because it’s the popular tool? Why is Spark so popular when most businesses don’t have big data?

Spark has been around for over a decade in the distributed processing space and has largely replaced MapReduce systems. But technology has continued to improve, and computers are cheaper and have more resources to process more data than ever before. Several newer tools, like Polars and DuckDB, let you process a lot of data on a single machine. Not having to deal with distributed processing simplifies your pipelines and can translate into faster queries and lower maintenance costs.

💡 Key Insight

Many organizations are using Spark, and many data engineers would still choose it today. The main reason is that, even though an alternative may be faster or cheaper in some situations, having a single tool that can handle the majority of use cases is very valuable. Spark can run on a single node, scale up for large distributed processing, and also handle streaming workloads.

Engineers also like that it’s open source and available in several managed services, which helps avoid vendor lock-in. And because it’s widely used, it’s easy to hire for, which is another important non-technical consideration. This all fits into a larger trend of tool fatigue and fragmentation caused by the piecemeal approach of the “Modern data stack”. Data engineers are looking to simplify their stack.

📚 Related reading: recommended Spark learning resources and Why Apache Spark is often considered “slow”.

3. Why data engineers don’t test: according to Reddit

Recently, a question and blog post in the community asked, “Why don’t data engineers test like software engineers do?” It drew a lot of discussion, so the author posted a follow-up, which was also insightful.

Even though many data engineers consider the profession a subset of software engineering, historically there has been a major cultural and technical gap between the two when it comes to automated testing. Despite building pipelines that feed critical analytical and machine learning systems, data engineers often lack consistent testing practices.

💡 Key Insight

There are two main types of tests data engineers use to test their data pipelines: unit tests and data quality tests. Unit tests are used to test code, such as data pipeline functions and classes. Data quality tests, on the other hand, are used to check the accuracy of data flowing through your pipeline.
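As a rough illustration of the difference, the sketch below puts one test of each kind side by side in plain Python. The `normalize_email` transformation, the `check_data_quality` rules, and the sample batch are hypothetical; in practice you would more likely write the unit test with a framework such as pytest and the data checks with a dedicated data quality tool.

```python
def normalize_email(email: str) -> str:
    """Pipeline transformation under test (hypothetical)."""
    return email.strip().lower()


def test_normalize_email() -> None:
    """Unit test: checks the code against a fixed, known input."""
    assert normalize_email("  Ada@Example.COM ") == "ada@example.com"


def check_data_quality(rows: list[dict]) -> list[str]:
    """Data quality test: checks the data itself and returns any failures."""
    failures = []
    ids = [row.get("user_id") for row in rows]
    if any(user_id is None for user_id in ids):
        failures.append("user_id contains nulls")
    if len(ids) != len(set(ids)):
        failures.append("user_id is not unique")
    if any("@" not in (row.get("email") or "") for row in rows):
        failures.append("email is malformed")
    return failures


# The unit test runs once per code change and needs no real data.
test_normalize_email()

# The data quality test runs on every batch, because the data can change
# even when the code does not.
batch = [
    {"user_id": 1, "email": normalize_email(" Ada@Example.COM")},
    {"user_id": 1, "email": "not-an-email"},
]
print(check_data_quality(batch))
```

For this batch the check reports a duplicate `user_id` and a malformed email, even though the unit test passes. That is the gap each kind of test covers: correct code can still receive bad data.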

Related reading: DE Wiki Guide to Testing Your Data Pipeline

Overall, testing is important, but data engineers often feel that unpredictable data sources lessen the value of tests. Testing SQL (which many pipelines use) has also historically been difficult, but tools like SQLMesh and dbt have made it easier.

There is also a spectrum of pipelines, from those that power mission-critical applications to those that power a dashboard no one looks at. This is why the first step in testing is identifying the most important pipelines and focusing your efforts there.

What do you think? Join the conversation.

Industry Pulse

Here are some industry topics and trends from this month:

1. The AI Agents Trend

Databricks & Snowflake both acquire managed PostgreSQL vendors

Last month Databricks acquired Neon for ~$1 billion, and this month it announced its new Lakebase database, powered by the Neon technology. This month Snowflake joined the fray with an intent to acquire Crunchy Data, valued at ~$250M, and a plan to launch Snowflake Postgres. Both companies hinted that these acquisitions are in part meant to power workloads run by AI agents, a trend we’ve been seeing recently in M&A.

Other recent AI + data driven acquisitions:

Salesforce acquired Informatica for $8 billion last month to power agentic AI.

Alation acquired Numbers Station (data catalog & AI startup for data querying) in May to power Agentic workflows.

ServiceNow acquired Data.world (AI Agents/Automation & metadata/catalog) in May to power AI agents and workflows.

AI-Assisted Data Engineering

Cube.dev released D3 - an analytics agent that uses Cube’s semantic layer product to talk to your data.

Databricks announced Agent Bricks, a no-code platform for building AI agents, and Lakeflow Designer, a no-code ETL tool with an AI-first dev experience. They also announced they are open-sourcing their Declarative Pipelines API for Spark.

Snowflake launched Semantic Views, which their AI agent can leverage, and AISQL, which expands their ML function capabilities.

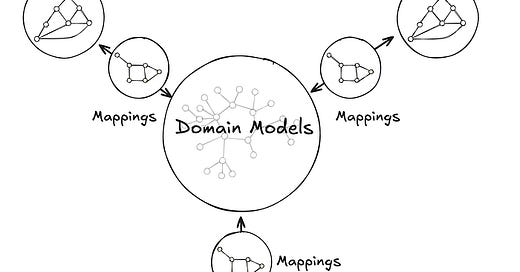

2. Model Once, Represent Everywhere: UDA (Unified Data Architecture) at Netflix

Netflix’s UDA (Unified Data Architecture) is a knowledge-graph-based control plane that enables data engineers to define core business entities - like Actor, Movie, Show - once and project them across multiple systems. This ensures consistency, discoverability, and extensibility across distributed services. When a domain model evolves - say, by adding a new attribute - all downstream pipelines and APIs stay aligned, reducing duplication, inconsistent terminology, and data quality issues.
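The general "define once, project everywhere" idea can be sketched in a few lines of Python. This is not Netflix's implementation or API: the `Movie` dataclass, the type mappings, and both projection functions are hypothetical, and the two targets (a SQL table and a GraphQL-style type) are chosen only for illustration.

```python
from dataclasses import dataclass, fields

# Hypothetical mappings from Python types to each target's type names.
SQL_TYPES = {str: "TEXT", int: "INTEGER"}
GRAPHQL_TYPES = {str: "String", int: "Int"}


@dataclass
class Movie:
    """The single definition of the business entity."""
    title: str
    release_year: int


def to_sql_ddl(entity: type) -> str:
    """Project the entity definition into a CREATE TABLE statement."""
    columns = ", ".join(f"{f.name} {SQL_TYPES[f.type]}" for f in fields(entity))
    return f"CREATE TABLE {entity.__name__.lower()} ({columns});"


def to_graphql_type(entity: type) -> str:
    """Project the same definition into a GraphQL-style type."""
    body = " ".join(f"{f.name}: {GRAPHQL_TYPES[f.type]}" for f in fields(entity))
    return f"type {entity.__name__} {{ {body} }}"


print(to_sql_ddl(Movie))
print(to_graphql_type(Movie))
```

Adding an attribute to `Movie` changes both projections at once, which is the property the paragraph above describes: downstream representations stay aligned because they are derived from one model rather than maintained by hand.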

3. Databricks: Introducing Apache Spark 4.0

Apache Spark 4.0 marks a major milestone in the evolution of the Spark analytics engine. This release brings significant advancements across the board – from SQL language enhancements and expanded connectivity, to new Python capabilities, streaming improvements, and better usability. Spark 4.0 is designed to be more powerful, ANSI-compliant, and user-friendly than ever, while maintaining compatibility with existing Spark workloads. In this post, we explain the key features and improvements introduced in Spark 4.0 and how they elevate your big data processing experience.

📅 Upcoming Events

No events - check back next month!

Share an event with the community here or view the full calendar

Opportunities to get involved:

Want to get involved in the community? Sign up here.

If you are reading this and are not subscribed, subscribe here.

Want more Data Engineering? Join our community.

Want to contribute? Learn how you can get involved.

Stay tuned next month for more updates, and thanks for being a part of the Data Engineering community.